Introduction

The AI landscape is witnessing a revolutionary shift with the advent of Dolphin 2.5 Mixtral-8x7b, a model that stands as a testament to the power of open-source collaboration, the efficiency of mixture-of-experts (MOE) architecture, and the bold new territory of uncensored AI technology.

Understanding Dolphin 2.5 Mixtral-8x7b

Dolphin 2.5 Mixtral-8x7b is a community fine-tune, published by Eric Hartford, of Mixtral 8x7B, the open-weight mixture-of-experts model from Mistral AI. The "8x7B" in the name refers to the 8 experts in each layer of a model built on a 7-billion-parameter-class design. Because the experts share the attention layers, the full model holds roughly 47 billion parameters rather than 56 billion, which makes it a lean yet potent tool in the AI arsenal.

The Open-Source Revolution

The open-source nature of Dolphin 2.5 Mixtral-8x7b democratizes cutting-edge technology. Mistral AI first released the underlying Mixtral 8x7B weights via a torrent link, and the Dolphin fine-tune is published openly on Hugging Face. This lets a broad spectrum of developers and enthusiasts access the model and build on it, fostering innovation and diverse applications.

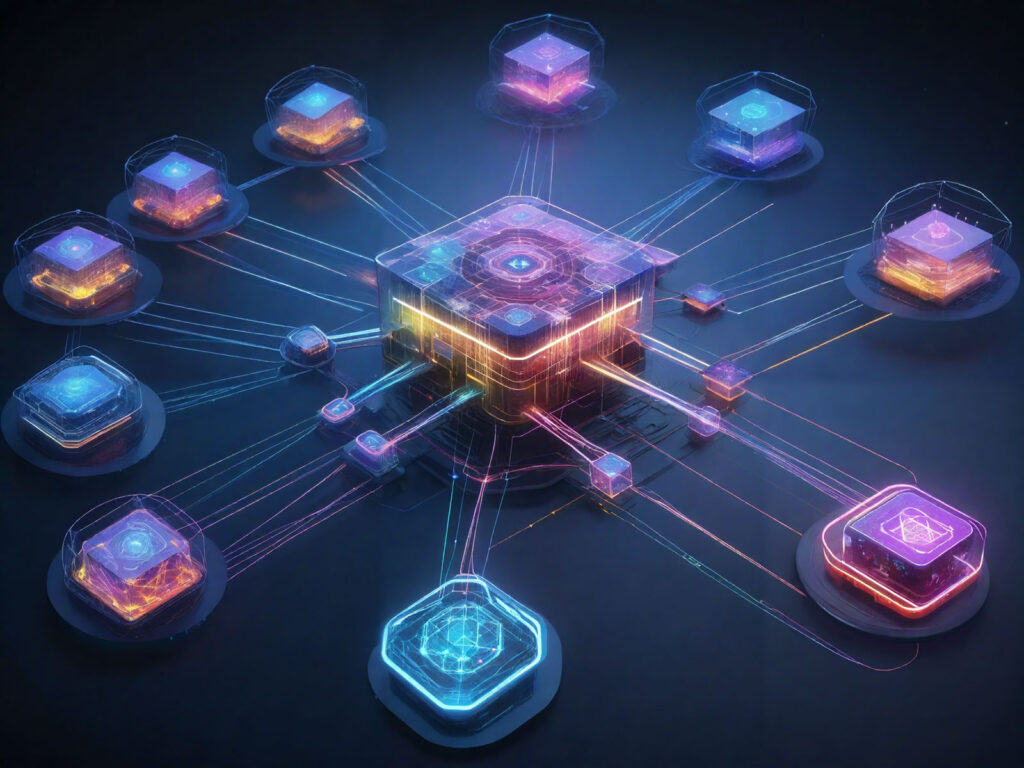

Mixture-of-Experts (MOE) Architecture

This model’s MOE architecture sets it apart from conventional dense LLM structures. A router engages only 2 of the 8 experts for each token during inference, so each token uses a fraction of the model’s total parameters. This balances output quality against compute cost and helps the model scale and adapt to a variety of tasks.
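The routing step described above can be sketched in a few lines. This is a toy illustration in plain Python, not the model's actual implementation: the function names (`route_token`, `moe_layer`) and the toy experts are hypothetical, and the choice to normalize weights with a softmax over only the selected experts is an assumption based on how Mixtral-style routers are usually described.

```python
import math

NUM_EXPERTS = 8  # experts per layer, as stated in the article
TOP_K = 2        # experts engaged per token, as stated in the article


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and return (index, weight) pairs.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, router_logits, experts):
    """Run only the selected experts and sum their outputs, weighted by the router."""
    output = [0.0] * len(token)
    for index, weight in route_token(router_logits):
        expert_out = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output


# Toy experts (hypothetical): expert i simply scales its input by (i + 1).
toy_experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]

# Hand-picked router scores for one token; experts 3 and 5 score highest.
example_logits = [0.0, 0.0, 0.0, 3.0, 0.0, 1.0, 0.0, 0.0]
print(route_token(example_logits))
print(moe_layer([1.0, 2.0], example_logits, toy_experts))
```

The other six experts are never called for this token, which is where the compute savings come from.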

The Uncensored Nature of Dolphin 2.5 Mixtral-8x7b

At its core, Dolphin 2.5 Mixtral-8x7b is an ‘uncensored model,’ operating without the conventional filters and alignments that guide AI responses. This approach results in raw, authentic answers that may challenge prevailing norms and perspectives, opening new possibilities for diverse applications while demanding a heightened sense of responsibility from users.

Real-World Applications and Accessibility

Accessible through platforms like Anakin AI and Replicate, Dolphin 2.5 Mixtral-8x7b offers a user-friendly interface and a versatile range of applications. From interactive conversations to complex problem-solving, the model’s multi-domain knowledge base makes it a valuable asset across various sectors.

Enhanced Capabilities for Coding Tasks

Dolphin 2.5 Mixtral-8x7b excels in coding tasks. It is a fine-tune of Mixtral, trained on additional datasets such as Synthia, OpenHermes, and PureDove.

Impact on the AI Industry

Built on the Mixtral 8x7B base model from the Paris-based startup Mistral AI, Dolphin 2.5 Mixtral-8x7b is changing the AI industry by challenging existing standards and promoting democratization in AI technology. This model goes beyond adding to AI achievements; it opens new avenues for innovation and exploration.

Technical Configuration

Mixtral 8x7B is a sparse MOE model. Each transformer layer holds 8 expert feed-forward networks, and a router sends each token to 2 of them, so only part of the model runs for any given token, which improves processing efficiency and speed. The configuration also includes a high-dimensional embedding space, multiple layers, and a substantial number of attention heads, making it suitable for a variety of applications.
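The "8x7B" label can suggest 8 × 7 = 56 billion parameters, but the experts share the attention layers, so the real total is lower. The back-of-the-envelope count below shows the arithmetic. The hyperparameters are assumptions taken from Mixtral's published configuration rather than from this article, and the helper names are hypothetical; treat the result as an estimate.

```python
# Assumed Mixtral 8x7B hyperparameters (from its published config, not this article).
DIM = 4096            # embedding / hidden size
N_LAYERS = 32         # transformer layers
N_HEADS = 32          # attention (query) heads
N_KV_HEADS = 8        # key/value heads (grouped-query attention)
HEAD_DIM = 128        # size of each head
FFN_HIDDEN = 14336    # inner size of each expert feed-forward network
VOCAB = 32000         # vocabulary size of the base model
NUM_EXPERTS = 8       # experts per layer
EXPERTS_PER_TOKEN = 2 # experts the router selects per token


def attention_params():
    """Query, key, value and output projections for one layer."""
    q = DIM * N_HEADS * HEAD_DIM
    k = DIM * N_KV_HEADS * HEAD_DIM
    v = DIM * N_KV_HEADS * HEAD_DIM
    o = N_HEADS * HEAD_DIM * DIM
    return q + k + v + o


def expert_params():
    """One gated feed-forward expert: three DIM x FFN_HIDDEN matrices."""
    return 3 * DIM * FFN_HIDDEN


def model_params(experts_counted):
    """Total parameters when `experts_counted` experts per layer are included."""
    router = DIM * NUM_EXPERTS   # gating matrix, always present
    norms = 2 * DIM              # two normalization weight vectors per layer
    per_layer = attention_params() + experts_counted * expert_params() + router + norms
    embeddings = 2 * VOCAB * DIM  # input embedding plus output projection
    final_norm = DIM
    return N_LAYERS * per_layer + embeddings + final_norm


total = model_params(NUM_EXPERTS)
active = model_params(EXPERTS_PER_TOKEN)
print(f"total parameters:  {total / 1e9:.1f}B")   # 46.7B
print(f"active per token:  {active / 1e9:.1f}B")  # 12.9B
```

Under these assumptions the model stores about 46.7 billion parameters but uses only about 12.9 billion of them for any single token.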

Methods to Run Mixtral 8x7B

There are currently three methods to run Mixtral 8x7B, each with its own implementation and requirements: the original Llama codebase, Fireworks.ai, and Ollama. Together they give users several ways to interact with and use the model.

Performance Evaluation

While Dolphin 2.5 Mixtral-8x7B showcases significant advancements, it also reveals areas needing improvement. Its results on multiple-choice questions and its instruction compliance, in particular, highlight the need for ongoing development and fine-tuning.

Conclusion

Dolphin 2.5 Mixtral-8x7b is not just an advancement in AI technology; it’s a harbinger of a new era in AI development. Its open-source, uncensored nature paves the way for more collaborative, innovative, and responsible AI solutions.

We invite our readers to explore Dolphin 2.5 Mixtral-8x7b further. Your insights and feedback are invaluable in shaping the future of AI. Join the conversation and be part of this exciting journey in technology.