Kimi K2: The Dawn of Open-Source Agentic AI

Key Takeaways

- Kimi K2 is Moonshot AI’s open Mixture-of-Experts (MoE) language model, with a trillion total parameters, of which only about 32 billion are activated for each token.

- It democratizes agentic AI, offering both foundational (Kimi-K2-Base) and instruction-tuned (Kimi-K2-Instruct) variants for diverse applications.

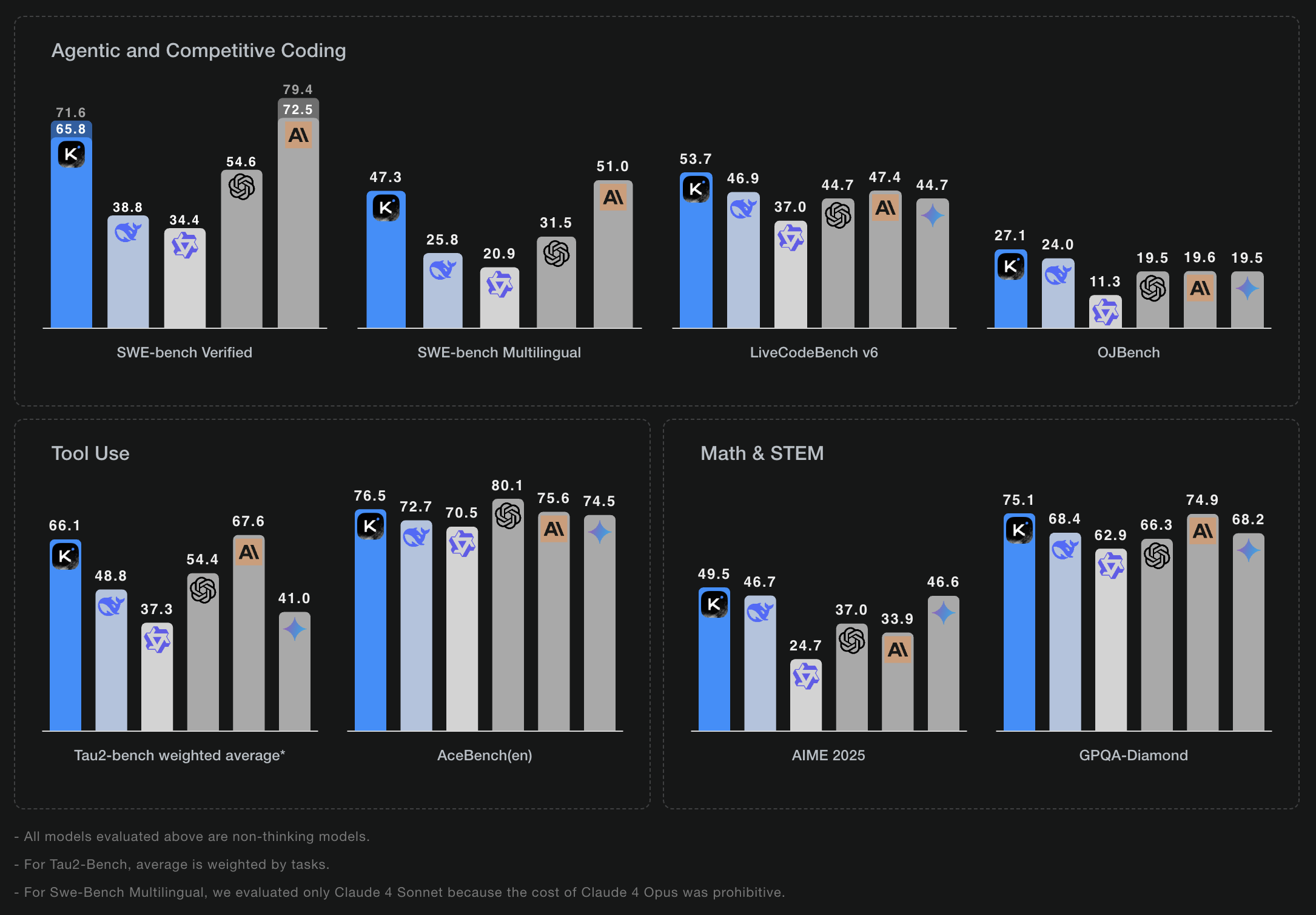

- The model excels in coding, mathematics, and particularly agentic tasks, achieving top scores among open-weight models on benchmarks such as SWE-bench Verified, Tau2-bench, and AceBench.

- Available under a permissive modified MIT license, Kimi K2 supports flexible deployment, from self-hosting on high-end GPUs (e.g., NVIDIA B200) to 4-bit quantized versions that have reportedly run on a pair of Apple M3 Ultra machines.

- Kimi K2 represents a significant advancement for open-source AI from China, fostering innovation in autonomous systems and large-scale fine-tuning.

Table of Contents

- Kimi K2: Ushering in the Era of Open Agentic AI

- Understanding Kimi K2: A Paradigm Shift in Open-Weight Models

- Strategic Impact and the Future of Agentic AI

- Conclusion

- FAQ

Kimi K2: Ushering in the Era of Open Agentic AI

The landscape of artificial intelligence is evolving at an unprecedented pace, with new models pushing the boundaries of what’s possible. For businesses and researchers navigating the complexities of AI adoption, finding powerful, accessible, and deployable solutions is a constant challenge. Proprietary models often come with steep costs and limited transparency, while many open-source alternatives may lack the scale or specialized capabilities needed for advanced applications.

Enter Kimi K2, an open Mixture-of-Experts (MoE) language model publicly released by Moonshot AI in July 2025. The model marks a significant step for open-source AI, particularly from China: it combines immense scale (a trillion total parameters, of which only about 32 billion are activated for each token) with a design focused on accessible deployment and agentic intelligence. For organizations building autonomous AI systems, that combination opens considerable room for innovation and customization.

Understanding Kimi K2: A Paradigm Shift in Open-Weight Models

Kimi K2 is not just another large language model; it represents a strategic move towards democratizing highly capable, autonomous AI agents. Its release signifies a pivotal moment, providing both the raw capacity for state-of-the-art research and practical, production-ready pathways for real-world autonomous systems. This positions it not only as a technical achievement but as a catalyst for the next generation of open and customizable AI infrastructure.

Technical Innovations Behind Kimi K2’s Power

Kimi K2’s impressive capabilities stem from several key architectural and design choices that prioritize both performance and efficiency.

Mixture-of-Experts (MoE) Architecture

At its core, Kimi K2 uses a Mixture-of-Experts (MoE) design. A router sends each token to a small subset of expert sub-networks, so the model holds a trillion parameters in total while activating only a fraction of them, about 32 billion, for any given token. This approach provides:

- Massive Capacity: The model can learn from an enormous dataset and capture intricate patterns due to its vast total parameter count.

- Computational Efficiency: By activating only a subset of “experts” for each input, the computational cost per inference is dramatically reduced compared to a fully dense model of similar overall capacity. This makes large-scale model deployment more feasible.

- Scalability: Total capacity can grow by adding experts, while per-token compute stays tied to the number of experts the router activates rather than to the total parameter count. Memory requirements still grow with the total, since every expert must be stored.
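To make the routing idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration of the technique, not Kimi K2’s actual router: the expert count, the `top_k` value, the toy experts, and every function name below are assumptions chosen for readability.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    # Hypothetical toy "expert": scales every feature of the token vector.
    return lambda vec: [scale * v for v in vec]

def moe_forward(token, router_weights, experts, top_k):
    """Route one token to its top_k experts and mix their outputs."""
    # One router logit per expert: dot product of the token with that expert's row.
    logits = [sum(w * t for w, t in zip(row, token)) for row in router_weights]
    # Keep only the top_k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Normalize the chosen logits into mixing weights (gates).
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token)
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out, chosen

random.seed(0)
NUM_EXPERTS, TOP_K, DIM = 8, 2, 4  # illustrative sizes only
experts = [make_expert(i + 1) for i in range(NUM_EXPERTS)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, used = moe_forward(token, router_weights, experts, TOP_K)
print(f"experts evaluated: {len(used)} of {NUM_EXPERTS}")

# The same ratio at Kimi K2's stated scale: 32 billion active of 1 trillion total.
active_fraction = 32e9 / 1e12
print(f"active parameter fraction: {active_fraction:.1%}")
```

Only the selected experts run for a given token, which is why compute scales with `top_k` rather than with the total number of experts. At the figures quoted above, roughly 3.2% of the parameters participate in each token’s forward pass.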

Kimi-K2-Base vs. Kimi-K2-Instruct

Moonshot AI has released Kimi K2 in two distinct variants, each tailored for specific use cases:

- Kimi-K2-Base: This foundational model is designed for researchers and developers who require a robust base for fine-tuning or adapting to specific tasks. It provides a powerful starting point for creating custom chatbots, specialized research tools, or vertical-specific AI applications. The open availability of this Base model grants organizations and academic labs unprecedented freedom to experiment with large-scale fine-tuning, custom verticals, and even base-variant ecosystem forks—a feat rarely possible with previous trillion-parameter models.

- Kimi-K2-Instruct: This variant is instruction-tuned, making it immediately ready for general-purpose deployment in chat applications or complex agentic tasks. It is optimized for following user directions with minimal latency, making it ideal for interactive AI systems.

Benchmarking Excellence: Coding, Math, and Agentic Tasks

Kimi K2 has posted leading results among open-weight models across major public evaluation suites. It particularly excels in:

- Coding: Generating and understanding code, vital for software development automation.

- Math: Solving complex mathematical problems, crucial for scientific and financial applications.

- Agentic (“do, not just say”) tasks: This is where Kimi K2 truly shines. Unlike models that merely describe actions, agentic models are designed to execute tasks and achieve goals, making Kimi K2 a powerful tool for building autonomous systems. Its architecture is purpose-built for agentic workflows, positioning it as a potential backbone for end-to-end automated systems in enterprise, research, finance, and more.

It has achieved top scores on SWE-bench Verified, Tau2-bench, and AceBench, reinforcing its position as a leading open-weight model for complex problem-solving.
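The “do, not just say” distinction is easiest to see in a tool-calling loop. The sketch below is a generic agent loop with a stubbed model, not Moonshot AI’s implementation: `stub_model`, `get_weather`, and the message format are hypothetical stand-ins so the example runs without any network access.

```python
import json

def get_weather(city):
    # Hypothetical tool returning canned data.
    return {"city": city, "forecast": "sunny"}

TOOLS = {"get_weather": get_weather}

def stub_model(messages):
    """Stand-in for a real model call: requests a tool first, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        return {"type": "final", "content": f"It is {data['forecast']} in {data['city']}."}
    return {"type": "tool_call", "name": "get_weather", "arguments": {"city": "Beijing"}}

def run_agent(user_prompt, model, tools, max_steps=5):
    """Alternate between model turns and tool executions until a final answer."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["content"], messages
        # The agent acts: it executes the requested tool and feeds the result back.
        result = tools[reply["name"]](**reply["arguments"])
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")

answer, transcript = run_agent("What is the weather in Beijing?", stub_model, TOOLS)
print(answer)
```

In a real deployment the stub would be replaced by a call to the model, and the `max_steps` cap guards against loops that never reach a final answer.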

Figure: Bar chart comparing model scores across benchmarks in three categories: Agentic and Competitive Coding, Tool Use, and Math & STEM. The benchmarks shown include SWE-bench Verified, SWE-bench Multilingual, LiveCodeBench v6, Tau2-bench, AceBench, AIME 2025, and GPQA-Diamond, with each model’s score drawn as a vertical bar labeled with its numeric value.

Long-Context Reasoning Capabilities

A significant design focus for Kimi K2 was long-context reasoning. This engineering choice enhances its ability to handle and understand extended documents or complex agent flows. While some reviewers note it can be verbose on highly challenging problems, its capacity for processing and reasoning over vast amounts of information is a critical advantage for applications requiring deep understanding of lengthy texts, such as legal analysis, research synthesis, or detailed process automation.

Deployment and Accessibility: Bringing Kimi K2 to Life

One of Kimi K2’s most compelling features is its commitment to open access and flexible deployment, making this powerful model available to a wide range of users and organizations.

Open-Weight and Permissive Licensing

Kimi K2 is available under a permissive, modified MIT license. This open-weight approach is a strategic move to democratize the development of highly capable, autonomous AI agents, as highlighted by APIDog. The main added stipulation applies to very large commercial deployments: products or services with more than 100 million monthly active users, or more than $20 million in monthly revenue, must display the “Kimi K2” name prominently in the user interface. This strikes a balance between broad accessibility and recognition for Moonshot AI’s contribution.
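The attribution rule reduces to a simple either-or threshold check. The sketch below encodes the two thresholds quoted above; the function and constant names are hypothetical, the revenue figure should be measured over whatever period the license text specifies, and the license itself remains the authoritative wording.

```python
# Thresholds as quoted in this article; consult the license text for exact terms.
MAU_THRESHOLD = 100_000_000
REVENUE_THRESHOLD_USD = 20_000_000

def attribution_required(monthly_active_users, revenue_usd):
    """Return True when either threshold is exceeded (hypothetical helper)."""
    return monthly_active_users > MAU_THRESHOLD or revenue_usd > REVENUE_THRESHOLD_USD

print(attribution_required(2_000_000, 500_000))      # small deployment
print(attribution_required(150_000_000, 1_000_000))  # exceeds the user threshold
```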

Self-Hosting and Infrastructure Requirements

For organizations prioritizing data privacy, customizability, or low latency, self-hosting Kimi K2 is a viable option. The model checkpoints are distributed in an efficient block-fp8 format, designed for rapid deployment across various high-performance inference frameworks, including:

- vLLM

- SGLang

- KTransformers

- TensorRT-LLM

This broad support makes both cloud and on-premises use practical. However, due to its scale, running Kimi K2 locally at production scale demands significant hardware resources, typically multiple NVIDIA B200 GPUs or a multi-node Hopper setup. For more accessible deployment, a 4-bit quantized version has reportedly run on two Apple M3 Ultra machines with 512 GB of memory each, signaling a future where massive models can run on-premises or at the edge. This push toward efficient quantization broadens the real-world applicability for privacy-focused or latency-sensitive use cases, a significant advantage for businesses exploring private AI agents. For more on optimizing AI model deployment costs, you can read our guide on Cost-Efficient AI Deployment.
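A rough back-of-the-envelope calculation shows why the hardware bar is so high and why 4-bit quantization matters. The sketch below counts weight storage only and assumes exactly one trillion parameters and 512 GB of memory on each of the two M3 Ultra machines. It ignores the KV cache, activations, and the scale factors that quantized formats also store, so real requirements are higher.

```python
TOTAL_PARAMS = 1_000_000_000_000  # "a trillion total parameters", per the article

def weight_footprint_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

fp8_gb = weight_footprint_gb(TOTAL_PARAMS, 8)   # 8-bit checkpoint
int4_gb = weight_footprint_gb(TOTAL_PARAMS, 4)  # 4-bit quantized weights
two_m3_ultra_gb = 2 * 512                       # assumed combined memory

print(f"fp8 weights:   ~{fp8_gb:.0f} GB")
print(f"4-bit weights: ~{int4_gb:.0f} GB")
print(f"4-bit fits in {two_m3_ultra_gb} GB with headroom: {int4_gb < two_m3_ultra_gb}")
```

Note that a sparse MoE model must still hold every expert in memory, even though only about 32 billion parameters are active per token. Memory follows the total count, and compute follows the active count.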

API Access for Cloud Deployment

For developers and businesses preferring a managed service, an OpenAI-compatible API is available via Moonshot AI’s platform. This API offers competitive pricing structures, differentiating between cache hits, misses, and output tokens to optimize cost efficiency. This flexibility allows users to choose the deployment method that best suits their infrastructure, budget, and operational needs.
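As an illustration, the sketch below builds an OpenAI-style chat completion request and estimates cost under the three-way pricing split described above. Treat every specific as an assumption: the base URL and model identifier are placeholders to confirm against Moonshot AI’s documentation, and the prices are invented values that only demonstrate the arithmetic. No request is actually sent.

```python
import json

BASE_URL = "https://api.moonshot.ai/v1"  # assumption: confirm in the official docs
MODEL = "kimi-k2-instruct"               # placeholder model identifier

def build_chat_request(prompt, api_key):
    """Assemble URL, headers, and JSON body following the OpenAI chat convention."""
    url = f"{BASE_URL}/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return url, headers, json.dumps(body)

def estimate_cost_usd(cached_input, uncached_input, output, prices):
    """Cost in USD given token counts and prices per million tokens."""
    total = (
        cached_input * prices["cache_hit"]
        + uncached_input * prices["cache_miss"]
        + output * prices["output"]
    )
    return total / 1_000_000

# Invented prices (USD per million tokens), for illustration only.
PLACEHOLDER_PRICES = {"cache_hit": 0.10, "cache_miss": 0.50, "output": 2.00}

url, headers, payload = build_chat_request("Summarize this contract.", "YOUR_API_KEY")
cost = estimate_cost_usd(800_000, 200_000, 50_000, PLACEHOLDER_PRICES)
print(url)
print(f"estimated cost: ${cost:.2f}")
```

Because cache hits are priced separately from misses, workloads that reuse a long shared prefix (a system prompt or a reference document, for example) benefit most from this pricing structure.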

Strategic Impact and the Future of Agentic AI

Kimi K2’s release is more than just a technical achievement; it has profound strategic implications for the global AI ecosystem, particularly in the realm of open-source and agentic AI.

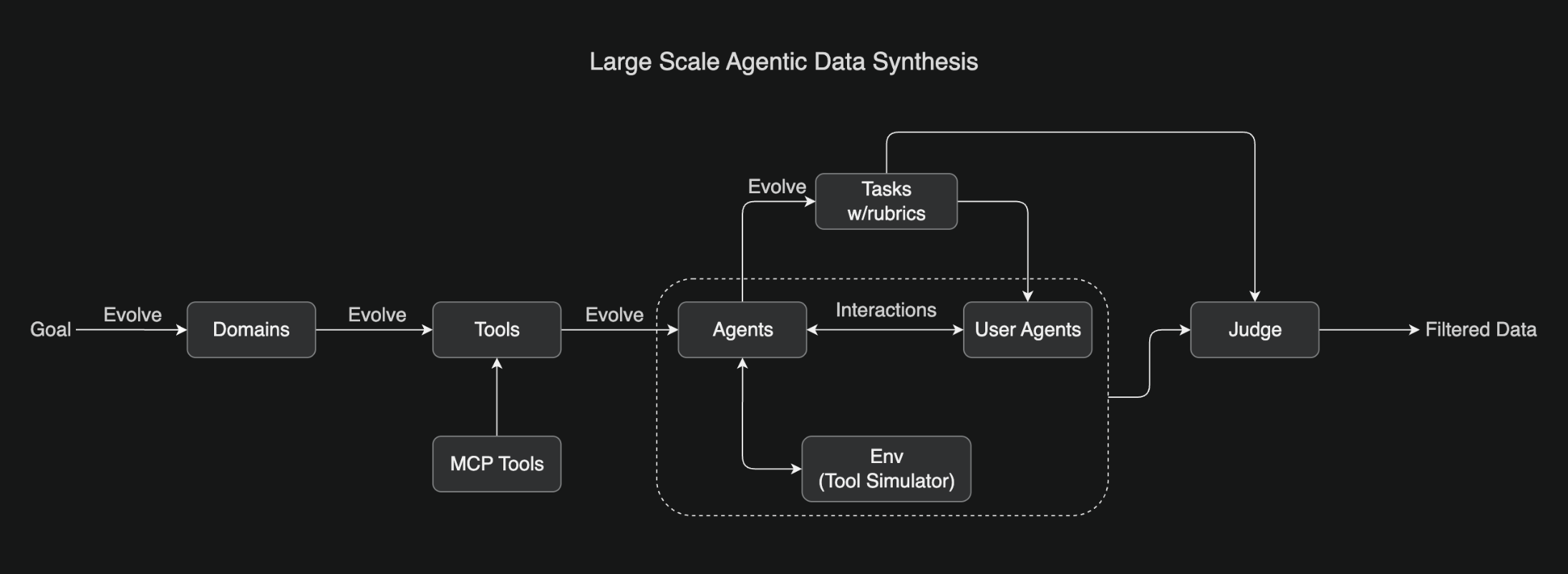

Flowchart titled “Large Scale Agentic Data Synthesis” illustrating the pipeline from a goal to filtered data. The flow starts with a Goal, which evolves into Domains, then into Tools (with support from MCP Tools), and then into Agents. Agents evolve alongside Tasks w/ rubrics, and interact with User Agents within an Environment (Tool Simulator). These interactions are judged by a Judge, which uses the tasks and outputs to produce Filtered Data. The chart includes iterative feedback loops showing evolution and refinement of each component.
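The final stages of the flowchart (tasks with rubrics, a tool simulator, a judge, and filtered data) can be sketched in a few lines. This is a toy illustration of the general idea, not Moonshot AI’s pipeline: the rubric format, the scoring rule, and all names below are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    prompt: str
    rubric: list  # hypothetical rubric: names of tools a good trajectory must call

@dataclass
class Trajectory:
    task: Task
    tool_calls: list = field(default_factory=list)

def simulate_tool(name, arg):
    # Tool simulator: returns a canned result instead of touching a real system.
    return f"{name}({arg}) -> ok"

def run_agent(task, plan):
    """Execute a fixed plan of (tool, argument) steps inside the simulator."""
    traj = Trajectory(task)
    for name, arg in plan:
        traj.tool_calls.append((name, simulate_tool(name, arg)))
    return traj

def judge(traj):
    """Score a trajectory as the fraction of rubric items it satisfied."""
    called = {name for name, _ in traj.tool_calls}
    hits = sum(1 for item in traj.task.rubric if item in called)
    return hits / len(traj.task.rubric)

def filter_trajectories(trajs, threshold=1.0):
    """Keep only trajectories the judge scores at or above the threshold."""
    return [t for t in trajs if judge(t) >= threshold]

task = Task("Book a flight and email the receipt", rubric=["search_flights", "send_email"])
good = run_agent(task, [("search_flights", "PEK->SFO"), ("send_email", "receipt")])
bad = run_agent(task, [("search_flights", "PEK->SFO")])

kept = filter_trajectories([good, bad])
print(f"kept {len(kept)} of 2 trajectories")
```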

A Landmark for Open-Source AI from China

Following the release of other significant models like DeepSeek, Kimi K2 is regarded by The Decoder as “the next open-weight model breakthrough from China”. It pushes the boundaries for publicly available agentic models, demonstrating China’s growing influence and commitment to contributing to the global open-source AI community. This increased competition and collaboration foster rapid innovation across the industry.

Democratizing Agentic AI Development

By releasing a trillion-parameter agentic model under a permissive license and ensuring robust open access, Moonshot AI positions itself as a key enabler for both grassroots and enterprise-scale AI innovation globally. This democratization means that smaller teams, startups, and academic institutions can now access and build upon a foundation model of a scale previously available only to a select few with immense computational resources. This aligns with the broader movement towards accessible AI, which also includes developer tools such as the Gemini CLI that bring AI to the command line.

Paving the Way for Advanced Agentic Ecosystems

Kimi K2’s architecture is purpose-built for agentic workflows. This means it is uniquely primed for building autonomous agents capable of executing complex tasks, not merely answering questions. Such capabilities are crucial for developing end-to-end automated systems across various sectors:

- Enterprise: Automating complex business processes, from customer service to supply chain management.

- Research: Conducting autonomous literature reviews, experimental design, and data analysis.

- Finance: Developing sophisticated trading agents, fraud detection systems, and market analysis tools.

- Healthcare: Assisting with diagnostics, treatment planning, and administrative tasks.

The model’s focus on long-form reasoning and “thinking” further enhances its utility as a foundational component for increasingly sophisticated autonomous systems.

Unleashing Large-Scale Fine-Tuning

The open availability of the Kimi-K2-Base model provides an unprecedented opportunity for organizations and academic labs to engage in large-scale fine-tuning. This allows for:

- Custom Verticals: Developing highly specialized AI models for niche industries or unique business requirements.

- Domain-Specific Expertise: Training Kimi K2 on proprietary datasets to achieve unparalleled performance in specific domains, such as legal tech, medical research, or financial modeling.

- Ecosystem Forks: Fostering independent development and innovation, leading to diverse applications built upon Kimi K2’s robust foundation.

Edge and On-Premise AI Revolution

The focus on efficient quantization, exemplified by the ability to run a 4-bit version on Apple M3 Ultras, signals a significant shift towards making massive AI models viable for deployment at the edge or on-premises. This is crucial for:

- Privacy-Focused Applications: Keeping sensitive data within an organization’s secure infrastructure, avoiding reliance on external cloud services.

- Latency-Sensitive Use Cases: Ensuring real-time responsiveness for applications where every millisecond counts, such as autonomous vehicles or industrial automation.

- Reduced Cloud Costs: Lowering operational expenditures by moving compute closer to the data source, an increasingly important consideration for large-scale AI adoption.

This development aligns with the growing interest in private infrastructure for AI, enabling greater control and security.
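For readers unfamiliar with what “4-bit” means in practice, here is a minimal sketch of symmetric 4-bit quantization for one small group of weights. It illustrates the general technique only. The specific scheme used for the quantized Kimi K2 build is not described in this article, and the function names and sample weights are made up.

```python
def quantize_4bit(weights):
    """Map floats to integer codes in [-7, 7] with one shared scale per group."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-7, min(7, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]

weights = [0.7, -0.35, 0.1, 0.0, -0.7, 0.22, 0.5, -0.05]  # made-up sample group
codes, scale = quantize_4bit(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("codes:", codes)
print(f"worst-case rounding error: {max_error:.3f} (bounded by scale / 2 = {scale / 2:.3f})")
```

Each code fits in 4 bits instead of the 16 a half-precision weight would occupy, at the cost of a rounding error bounded by half the scale. Production schemes typically use small groups with their own scales to keep that error low.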

Conclusion

Kimi K2 represents a monumental achievement in the realm of open-source artificial intelligence. With its innovative Mixture-of-Experts architecture, dual-variant offering, exceptional performance in agentic tasks, and commitment to open access and versatile deployment, Moonshot AI has provided the global community with a powerful new tool. Kimi K2 is poised to accelerate the development of autonomous AI systems, democratize access to advanced models, and catalyze innovation across industries. Its release is not just a technical milestone but a strategic enabler for the next generation of customizable and production-ready AI infrastructure.

FAQ

What is Kimi K2 and what makes it unique?

Kimi K2 is Moonshot AI’s open Mixture-of-Experts (MoE) language model with a trillion total parameters, of which only about 32 billion are activated for each token. Its focus on agentic tasks, combined with an open-weight, permissive license, makes it a significant tool for developing autonomous AI systems and broadening access to them.

Can Kimi K2 be self-hosted, and what are the hardware requirements?

Yes, Kimi K2 can be self-hosted. For production-scale use, it typically requires multiple NVIDIA B200 GPUs or a multi-node Hopper setup. However, a 4-bit quantized version has reportedly run on more accessible hardware, namely two Apple M3 Ultra machines with 512 GB of memory each, which expands the deployment options.

How does Kimi K2 contribute to the open-source AI community?

Kimi K2 is released under a permissive, modified MIT license, making a trillion-parameter agentic model openly accessible. This significantly democratizes advanced AI development, allows for large-scale fine-tuning by academic institutions and startups, and solidifies China’s role as a major contributor to the global open-source AI ecosystem, fostering competition and innovation.